How Well Do You Speak English? Assessing the Validity of the American Community Survey English-Ability Question

Erik Vickstrom, Social, Economic, and Housing Statistics Division

Using data from the American Community Survey, the Census Bureau estimated that 60.4 million people, or 20.7 percent of the population, spoke a language other than English at home in 2013.

Of these people, 58.3 percent reported speaking English “very well.” The Census Bureau generates statistics on English ability by asking respondents who report speaking a language other than English at home to indicate how well they speak English: “very well,” “well,” “not well” or “not at all.” Federal programs use data from this English-speaking-ability question for three purposes:

- To decide which localities are required to produce voting materials in minority languages.

- To determine the number of school-age English-language learners in each state.

- To determine which governmental programs should provide assistance to those who have limited ability to communicate in English.

A new working paper investigates whether this question and its self-assessment of English ability actually measure an individual’s real English ability.

Researchers at the Census Bureau have been interested in measuring English ability since the question first appeared on the 1980 Census questionnaire. In 1982, results from the Census Bureau’s English Language Proficiency Study showed a strong correlation between the English-speaking-ability question and English-proficiency test scores. In addition, results from the 1986 National Content Test found a positive correlation between spoken English ability and reading and writing ability in English. More recently, a National Research Council report called for additional research on the accuracy of this survey item.

My research seeks to provide insights into the validity of the Census Bureau’s American Community Survey English-speaking-ability question by comparing self-assessments of English ability with objective tests of English literacy. Using data from the National Assessment of Adult Literacy, conducted in 2003 by the National Center for Education Statistics, I find that self-reported English ability is consistent with objective measures of literacy.

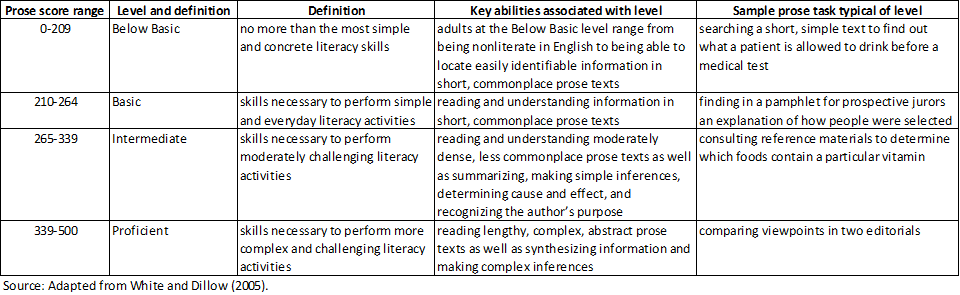

Table 1: Descriptions of prose proficiency levels, National Assessment of Adult Literacy

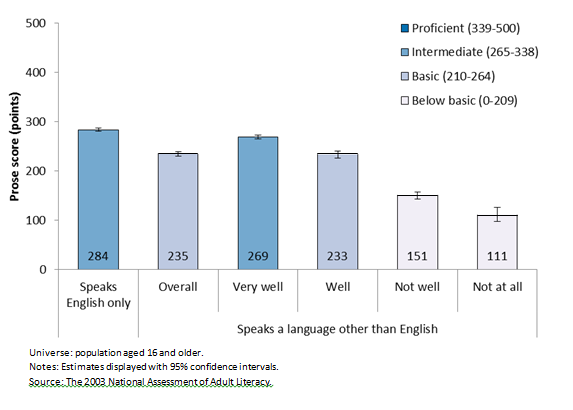

Figure 1: Average prose literacy scores by self-reported English ability, National Assessment of Adult Literacy

Respondents to the National Assessment of Adult Literacy completed a literacy assessment that drew from 152 open-ended questions simulating real-life situations. While these tests allowed the assessment of prose, document and quantitative literacy, my research focused only on prose literacy scores. The prose assessment, which sought to measure the language skills necessary for effective access to public service programs, seemed most comparable to the adult English proficiency tests used in the English Language Proficiency Study.

The prose literacy assessment yields a prose score between 0 and 500 for each respondent. The literacy scores correspond to four performance levels: “below basic,” “basic,” “intermediate” and “proficient.” Table 1 shows these performance levels with the corresponding ranges of literacy scores, key abilities and sample tasks for each level. For example, respondents scoring in the “proficient” prose-score range are able to compare viewpoints in two editorials, while those scoring in the “intermediate” prose-score range are able to consult reference materials to determine which foods contain a particular vitamin.

Figure 1 shows the relationship between self-assessed English ability and average prose-literacy scores among National Assessment of Adult Literacy respondents. Respondents who reported speaking only English had an average score of 284 on the prose literacy assessment, which falls in the intermediate performance level. Speakers of languages other than English who reported speaking English “very well” had an average prose-literacy score of 269, which also falls in the intermediate performance level. In contrast, speakers of languages other than English who reported speaking English “well” had an average score of 233, which puts them in the basic performance level. Those who reported speaking English “not well” or “not at all” had average scores of 151 and 111, respectively, and both groups fall in the below-basic performance level.
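The mapping from average scores to performance levels can be illustrated with a minimal Python sketch. The cut points used here (210, 265 and 340) are an assumption taken from the published National Assessment of Adult Literacy prose ranges, since Table 1 appears here only as an image; the function and variable names are hypothetical and are not part of the working paper's analysis.

```python
from bisect import bisect_right

# ASSUMPTION: lower bounds of the "basic", "intermediate" and "proficient"
# prose levels, taken from the published NAAL prose ranges (0-209, 210-264,
# 265-339, 340-500). Check them against Table 1 before relying on them.
LEVEL_LOWER_BOUNDS = [210, 265, 340]
LEVEL_NAMES = ["below basic", "basic", "intermediate", "proficient"]


def prose_level(score):
    """Return the performance level for a prose score between 0 and 500."""
    if not 0 <= score <= 500:
        raise ValueError("prose scores range from 0 to 500")
    # bisect_right counts how many lower bounds the score has reached,
    # which is the index of the matching level name.
    return LEVEL_NAMES[bisect_right(LEVEL_LOWER_BOUNDS, score)]


# Average prose scores by self-reported English ability, as given in the text.
AVERAGE_SCORES = {
    "only English": 284,
    "very well": 269,
    "well": 233,
    "not well": 151,
    "not at all": 111,
}

levels = {group: prose_level(score) for group, score in AVERAGE_SCORES.items()}

for group, level in levels.items():
    print(f"{group}: {AVERAGE_SCORES[group]} ({level})")
```

Under these assumed cut points, the sketch places each group in the same performance level that the paragraph above reports.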

Speakers of non-English languages who report speaking English “very well,” like English-only speakers, have average prose-literacy scores that fall into the intermediate performance level. Prose literacy at this level indicates abilities sufficient to read and understand moderately dense, less commonplace prose texts. Thus, the speakers with the best self-reported English ability can perform the same key tasks as the average English-only speaker. Speakers of languages other than English reporting an English ability of less than “very well,” in contrast, have lower language skills on average.

These results suggest that the English-ability question, despite being a self-assessment, does a good job of measuring English ability.